Statistical learning is the cornerstone of modern data‑driven engineering: from predicting the fatigue life of structural components, to diagnosing faults in power plants, to optimizing sensor layouts in autonomous vehicles. This course introduces the core ideas of statistical learning with an emphasis on practical application in engineering contexts.

The class is application‑driven: proofs are optional, and the focus is on understanding assumptions, interpreting results, and making data‑based engineering decisions.

By the end of this course, students will:

- Conceptual Understanding
  - Grasp the statistical foundations of least‑squares estimation, regularization, and discriminant analysis.
  - Identify the assumptions underlying each method and assess their suitability for a given engineering problem.
- Practical Implementation
  - Implement ordinary least squares, ridge, lasso, weighted least squares, and generalized linear models using Python (NumPy, SciPy, scikit‑learn).
  - Apply logistic regression, LDA, QDA, and naïve Bayes classifiers to binary engineering data sets.
- Model Evaluation & Validation
  - Use cross‑validation, bootstrap, and out‑of‑sample testing to evaluate predictive performance.
  - Interpret residual plots, ROC curves, confusion matrices, and information criteria in engineering terms.
- Feature Engineering & Selection
  - Build and transform predictor variables from raw engineering measurements (e.g., Fourier transforms of vibration data, time‑to‑failure estimates).
  - Apply variable selection techniques (forward/backward selection, Lasso path) to reduce dimensionality while preserving predictive power.
- Communication of Results
  - Generate clear, engineering‑focused reports and presentations that translate statistical metrics into actionable insights for non‑statistical stakeholders.
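The regression and validation objectives above can be illustrated with a short scikit-learn sketch. It is not course material: it assumes a synthetic data set (200 samples, 10 standardized predictors, only three of them informative) in place of real engineering measurements, and compares OLS, ridge, and lasso by their 5-fold cross-validated mean squared error. Note that scikit-learn calls the regularization parameter λ `alpha`.

```python
import numpy as np
from sklearn.linear_model import LinearRegression, RidgeCV, LassoCV
from sklearn.model_selection import KFold, cross_val_score

# Synthetic data (assumption): 200 samples, 10 predictors, only the first 3 matter.
rng = np.random.default_rng(0)
n, p = 200, 10
X = rng.normal(size=(n, p))  # already standardized; real data would need a StandardScaler
beta_true = np.zeros(p)
beta_true[:3] = [2.0, -1.5, 0.5]
y = X @ beta_true + rng.normal(scale=1.0, size=n)

alphas = np.logspace(-3, 2, 30)  # candidate values of lambda ("alpha" in scikit-learn)
cv = KFold(n_splits=5, shuffle=True, random_state=0)

models = {
    "OLS": LinearRegression(),
    "ridge": RidgeCV(alphas=alphas),          # tunes lambda by efficient leave-one-out CV
    "lasso": LassoCV(alphas=alphas, cv=cv),   # tunes lambda by 5-fold CV
}

scores = {}
for name, model in models.items():
    # Outer 5-fold CV estimate of the test MSE; scikit-learn returns the negated MSE.
    # The *CV models retune lambda inside each training fold, so this is a nested estimate.
    mse = -cross_val_score(model, X, y, cv=cv, scoring="neg_mean_squared_error")
    scores[name] = mse.mean()
    print(f"{name:>5s}: CV MSE = {mse.mean():.3f}")

# Refit the lasso on all data to inspect the selected lambda and which coefficients survive.
lasso = LassoCV(alphas=alphas, cv=cv).fit(X, y)
print("selected lambda:", lasso.alpha_)
print("non-zero coefficients:", np.flatnonzero(lasso.coef_))
```

One caveat when comparing with textbook formulas: scikit-learn's `Lasso` minimizes RSS/(2n) + α Σ|β_j| while `Ridge` minimizes RSS + α Σβ_j², so the numerical value of `alpha` is not directly interchangeable with a λ defined on the unscaled RSS.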

Students are expected to have:

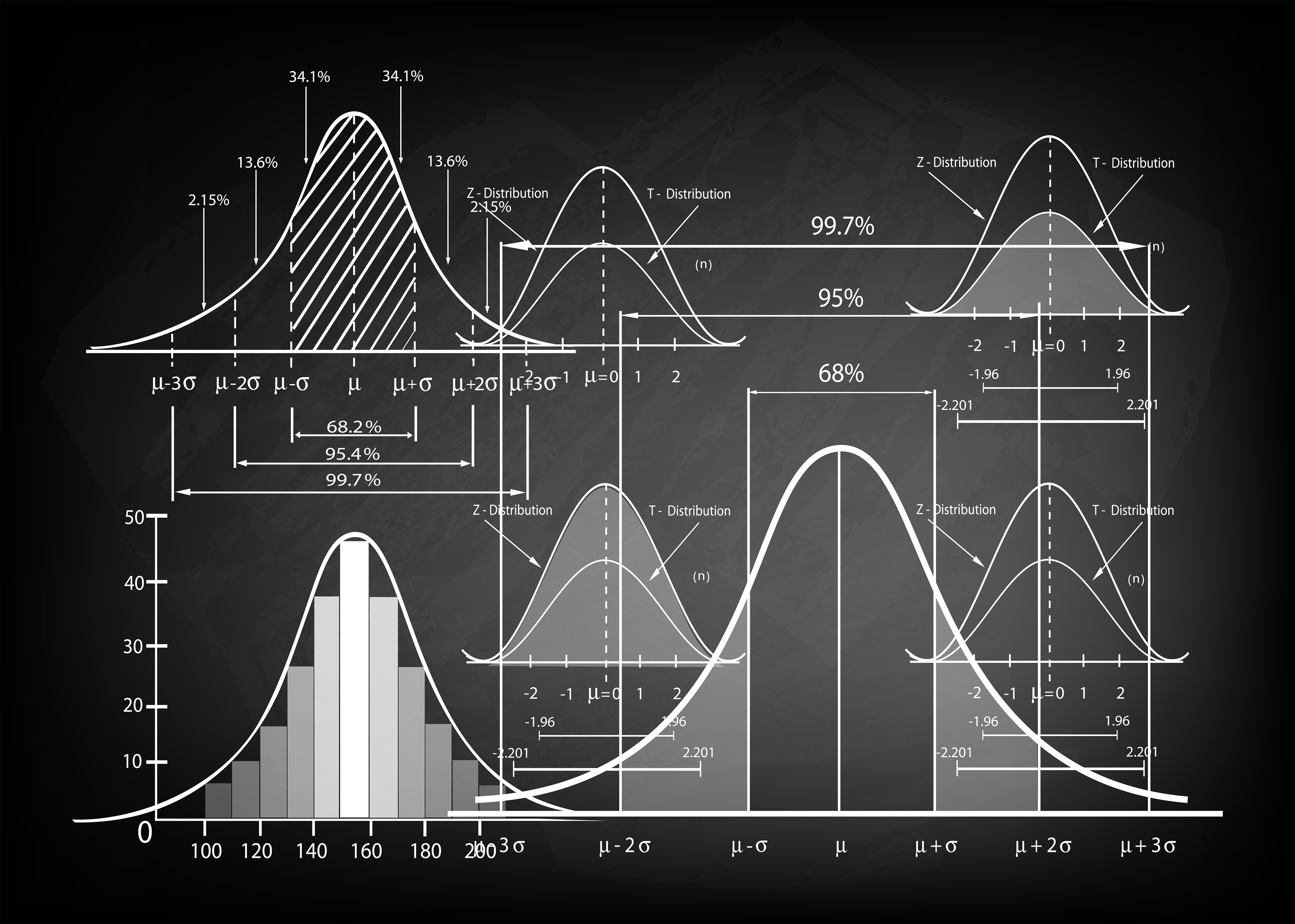

- Mathematics: Linear algebra (matrix operations, eigen‑decomposition), calculus (basic differentiation), and probability basics (distributions, expectation).

- Programming: Familiarity with Python (or MATLAB/Octave) and with data‑manipulation libraries such as pandas.

- Statistics: Introductory statistics covering mean, variance, and hypothesis testing.

- Engineering Background: At least one course in mechanics, thermodynamics, or signal processing is highly recommended to appreciate the data sets we will analyze.

Upon successful completion, students will be able to:

| # | Outcome | Assessment Evidence |
|---|---|---|
| 1 | Model Specification | Write the equations for OLS, ridge, lasso, and logistic regression; explain the role of the regularization parameters. |
| 2 | Data Preprocessing | Clean a noisy engineering data set, engineer features, and justify the choices in a lab notebook. |
| 3 | Model Fitting | Fit models in Python, report coefficients, and assess assumptions via residual diagnostics. |
| 4 | Regularization & Tuning | Select λ for ridge/lasso using cross‑validation; justify the choice with plots or a grid search. |
| 5 | Classification | Build and compare logistic regression, LDA, QDA, and naïve Bayes models; interpret classification reports. |
| 6 | Evaluation Metrics | Compute MSE, R², ROC AUC, and confusion matrices; discuss their relevance in engineering risk assessment. |
| 7 | Model Interpretation | Translate coefficient signs into engineering interpretations (e.g., higher temperature → lower fatigue life). |
| 8 | Reporting | Present a complete analysis (data, model, diagnostics, recommendations) in a 10‑slide deck and a written report. |
| 9 | Ethical & Practical Considerations | Discuss overfitting, bias, and limitations of statistical models in safety‑critical engineering systems. |
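Outcome 1 asks for the model equations. One common way to write them (a sketch in a standard textbook notation: n observations, p predictors x_{ij}, responses y_i, coefficients β_0, β_1, ..., β_p) is:

$$
\mathrm{RSS}(\beta) = \sum_{i=1}^{n}\Big(y_i - \beta_0 - \sum_{j=1}^{p}\beta_j x_{ij}\Big)^2,
\qquad
\hat{\beta}^{\mathrm{OLS}} = \arg\min_{\beta}\ \mathrm{RSS}(\beta)
$$

$$
\hat{\beta}^{\mathrm{ridge}}_{\lambda} = \arg\min_{\beta}\Big\{\mathrm{RSS}(\beta) + \lambda\sum_{j=1}^{p}\beta_j^2\Big\},
\qquad
\hat{\beta}^{\mathrm{lasso}}_{\lambda} = \arg\min_{\beta}\Big\{\mathrm{RSS}(\beta) + \lambda\sum_{j=1}^{p}|\beta_j|\Big\}
$$

$$
\Pr(Y = 1 \mid X = x) = \frac{\exp\big(\beta_0 + \sum_{j=1}^{p}\beta_j x_j\big)}{1 + \exp\big(\beta_0 + \sum_{j=1}^{p}\beta_j x_j\big)}
$$

The regularization parameter λ ≥ 0 sets the strength of the penalty: λ = 0 gives back OLS, larger λ shrinks β_1, ..., β_p toward zero (the intercept β_0 is not penalized), and the absolute-value penalty of the lasso can set some coefficients exactly to zero, so it also performs variable selection. The logistic regression coefficients are estimated by maximum likelihood rather than by minimizing RSS.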

The course is taught jointly by Ismaïl Ben Hassan-Saïdi and Jean-Christophe Loiseau and is divided into two complementary parts.

- PART I: ???
  - Lecture 1: ???
    - Topics covered: ???
  - Lecture 2: ???
    - Topics covered: ???
  - Lecture 3: ???
    - Topics covered: ???
  - Lecture 4: ???
    - Topics covered: ???


- PART II: Statistical modelling and design of experiments
  - Lecture 1: Least-squares estimators and the bias-variance trade-off
    - Topics covered: ordinary, weighted, generalized, and regularized least squares; bias and variance of a statistical model.
  - Lecture 2: Model selection
    - Topics covered: cross-validation and bootstrapping.
  - Lecture 3: Binary classification problems
    - Topics covered: Bayes classifier, logistic regression, linear discriminant analysis (LDA), and the naïve Bayes classifier.
  - Lecture 4: Some elements of design of experiments
    - Topics covered: full factorial, fractional factorial, and optimal designs.

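The classifiers of Lecture 3 and the cross-validation of Lecture 2 can be combined in a short scikit-learn sketch. It assumes a synthetic binary data set generated by `make_classification` (a stand-in for, e.g., healthy vs. faulty sensor readings, not real course data) and uses Gaussian naive Bayes as one possible naive Bayes variant. QDA, which appears in the learning outcomes, is included for comparison.

```python
from sklearn.datasets import make_classification
from sklearn.discriminant_analysis import (LinearDiscriminantAnalysis,
                                           QuadraticDiscriminantAnalysis)
from sklearn.linear_model import LogisticRegression
from sklearn.metrics import confusion_matrix
from sklearn.model_selection import StratifiedKFold, cross_val_predict, cross_val_score
from sklearn.naive_bayes import GaussianNB

# Synthetic binary data (assumption): 500 samples, 6 features, 4 of them informative.
X, y = make_classification(n_samples=500, n_features=6, n_informative=4,
                           n_redundant=0, random_state=0)

classifiers = {
    "logistic": LogisticRegression(max_iter=1000),
    "LDA": LinearDiscriminantAnalysis(),
    "QDA": QuadraticDiscriminantAnalysis(),
    "naive Bayes": GaussianNB(),  # class-conditional Gaussian features
}
# Stratified folds keep the class proportions roughly equal in every fold.
cv = StratifiedKFold(n_splits=5, shuffle=True, random_state=0)

auc = {}
for name, clf in classifiers.items():
    scores = cross_val_score(clf, X, y, cv=cv, scoring="roc_auc")
    auc[name] = scores.mean()
    print(f"{name:>12s}: CV ROC AUC = {scores.mean():.3f} ± {scores.std():.3f}")

# Out-of-fold predictions: each sample is predicted by a model that never saw it.
y_pred = cross_val_predict(LogisticRegression(max_iter=1000), X, y, cv=cv)
cm = confusion_matrix(y, y_pred)  # rows: true class, columns: predicted class
print(cm)
```

Using out-of-fold predictions for the confusion matrix avoids the optimistic bias of evaluating a classifier on the data it was fitted to.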

This short course is partly inspired by the online course Statistical Learning with Python by Stanford University. Its companion books are available online; in particular, we will make use of:

- An Introduction to Statistical Learning: with Applications in Python by James et al. Although the book is published by Springer, the authors have been authorized to distribute the PDF online for free.

Given the limited number of hours dedicated to this class, only chapters 1 to 4 and chapter 6 are of interest. Students are expected to have read them by the end of the class. Weekly homework assignments will consist of exercises taken directly from the book, so we recommend downloading it as soon as you have access to this course.

Steven Brunton, math educator and professor at the University of Washington (Seattle, USA), has a YouTube channel with several videos relevant to this course:

- Probability & Statistics Bootcamp
- Introduction to statistics and data analysis

Regarding fraud: to minimize the risk of fraud, students must follow these rules:

- Telephones, tablets, personal computers, or other communication devices must be stored away during the exam.

- Unless explicitly stated otherwise, no documents are allowed during the exam.

- Although we cannot enforce it, homework is meant to be a personal effort. If cheating is suspected, you may be called in for an oral exam, during which you will be expected to justify any mathematical statement in your homework and any line of your Python program.

Failure to comply may result in a penalty. Moreover, any detected cheating will be reported to the school and may lead to disciplinary action, possibly including automatic failure of this class.